From a BBC report, coining the term “AI Distortion”:

> Our researchers tested market-leading consumer AI tools – ChatGPT, Perplexity, Microsoft Copilot and Google Gemini […] The team found ‘significant issues’ with just over half of the answers generated by the assistants. The AI assistants introduced clear factual errors into around a fifth of answers they said had come from BBC material. And where AI assistants included ‘quotations’ from BBC articles, more than one in ten had either been altered, or didn’t exist in the article. […] AI assistants do not discern between facts and opinion in news coverage; do not make a distinction between current and archive material

This roughly mirrors my own experience, although I did not see clear patterns that could be boiled down to simple rules.

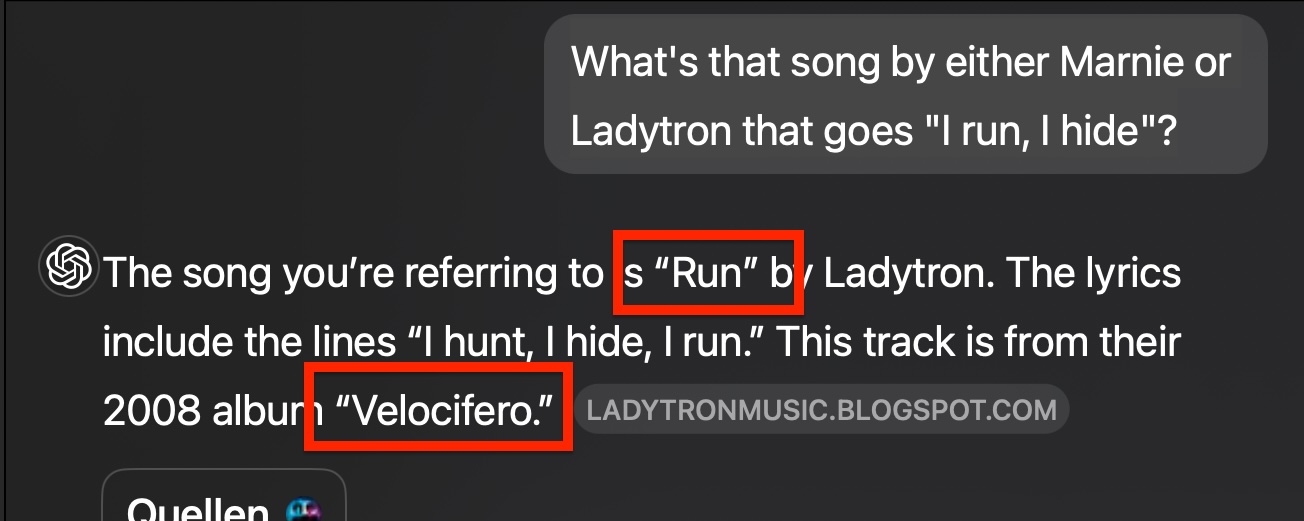

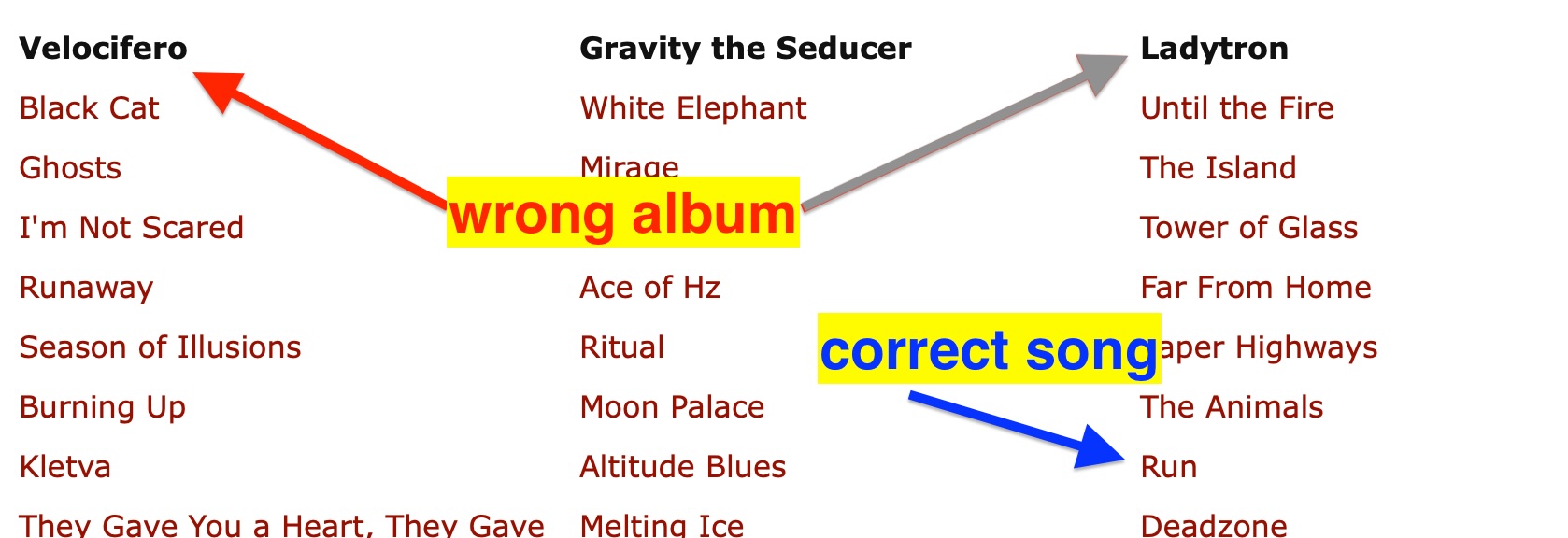

One source of errors is tables on websites. In the following example, ChatGPT got lost in the row/column arrangement of a (non-semantic, div-based HTML) table.

ChatGPT:

The misrepresented facts, as they appear on the cited source website:

How I use these tools instead: rather than asking questions and relying on the answers, I treat them more like an old-fashioned search engine, with prompts such as “Is there evidence that …” or “Find me the article that …”, and then visit the cited pages to verify. Quite often, the sources do not support the AI’s answer.
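For quotations specifically (the failure mode the BBC report describes, where quotes were altered or did not exist in the article), part of this verification can be automated. The following is a minimal sketch under stated assumptions: it expects that you have already fetched the article’s plain text yourself, and the function names (`normalize`, `check_quote`) and the 0.9 similarity threshold are hypothetical, illustrative choices rather than any established tool.

```python
import difflib
import re
import unicodedata

# Hypothetical helper: map curly quotes to straight ones so typography alone doesn't cause a mismatch.
_QUOTE_MAP = str.maketrans({"‘": "'", "’": "'", "“": '"', "”": '"'})


def normalize(text: str) -> str:
    """Unicode-normalize, straighten quotes, collapse whitespace and lowercase."""
    text = unicodedata.normalize("NFKC", text).translate(_QUOTE_MAP)
    return re.sub(r"\s+", " ", text).strip().lower()


def check_quote(quote: str, source_text: str, threshold: float = 0.9) -> str:
    """Classify an AI-supplied quote as 'verbatim', 'altered' or 'missing'.

    The 0.9 threshold is an arbitrary assumption; tune it for your material.
    """
    q = normalize(quote)
    src = normalize(source_text)
    if q in src:
        return "verbatim"  # exact (normalized) substring of the source
    # Slide a window with the quote's word count over the source text
    # and keep the best character-level similarity ratio.
    q_len = len(q.split())
    src_words = src.split()
    best = 0.0
    for i in range(max(1, len(src_words) - q_len + 1)):
        window = " ".join(src_words[i:i + q_len])
        best = max(best, difflib.SequenceMatcher(None, q, window).ratio())
    # A close but inexact match suggests the quote was altered.
    return "altered" if best >= threshold else "missing"


if __name__ == "__main__":
    article = "The minister said the plan would cut costs by ten percent next year."
    for q in ("would cut costs by ten percent",
              "the plan would cut costs by twenty percent",
              "the economy will collapse tomorrow"):
        print(f"{q!r}: {check_quote(q, article)}")
```

A script like this only flags candidates; an “altered” or “missing” result is still a cue to open the page and read it, not a verdict.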

Aside from Deep Research perhaps, there is no good, or even “better”, option. Alas, I haven’t tried Kagi yet; I would love to hear from people who have actually used it!