Notes on the Microsoft Inspire session “Client AI at Scale”:

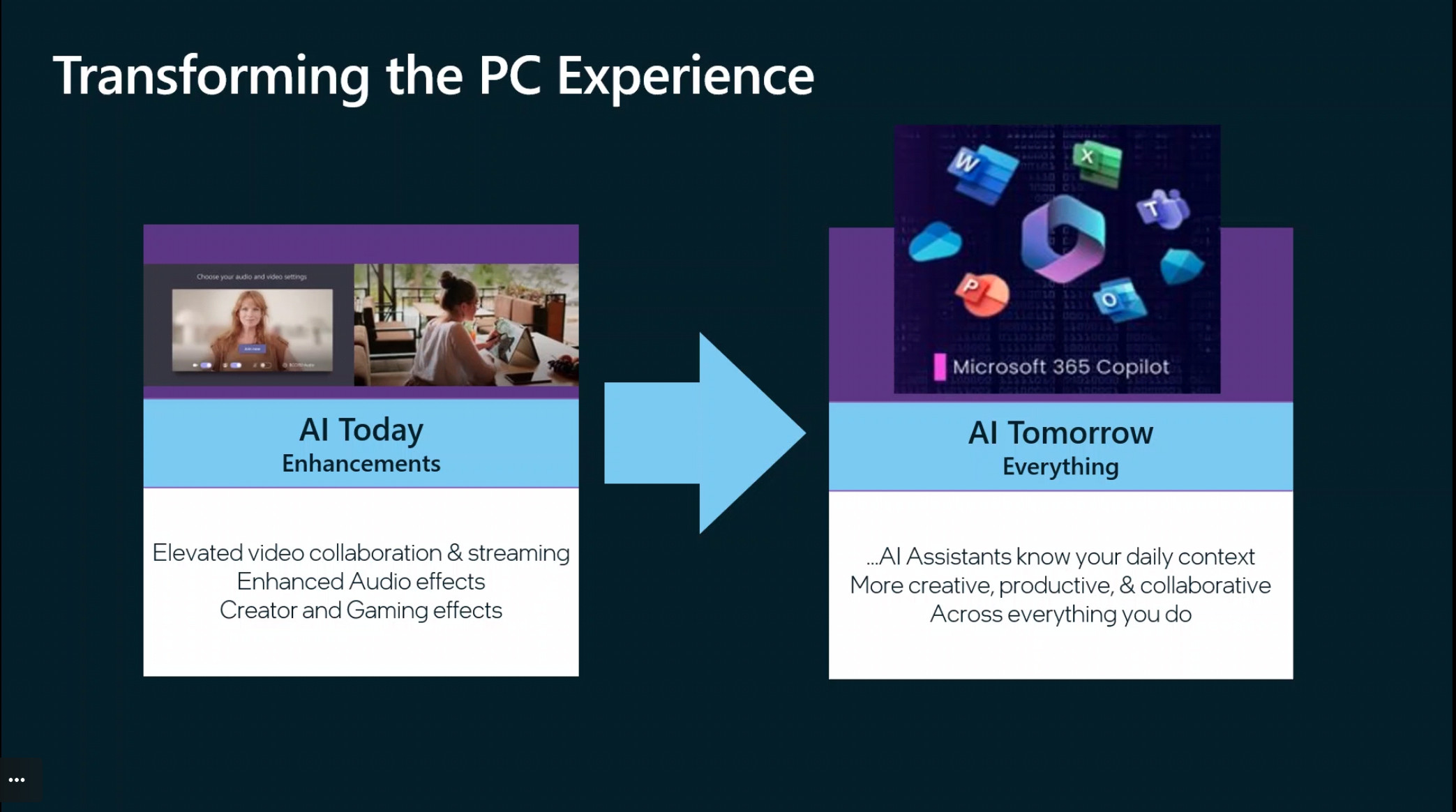

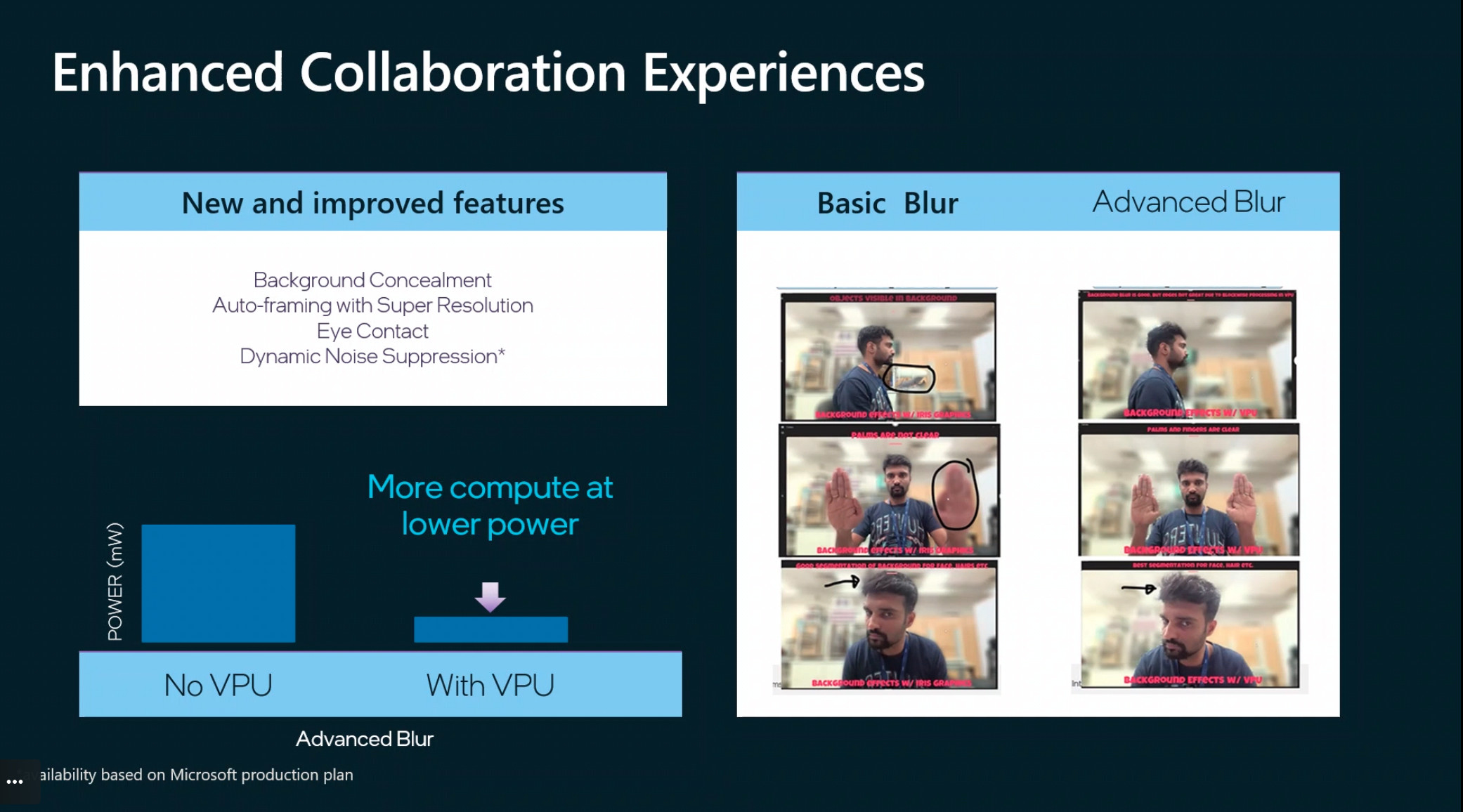

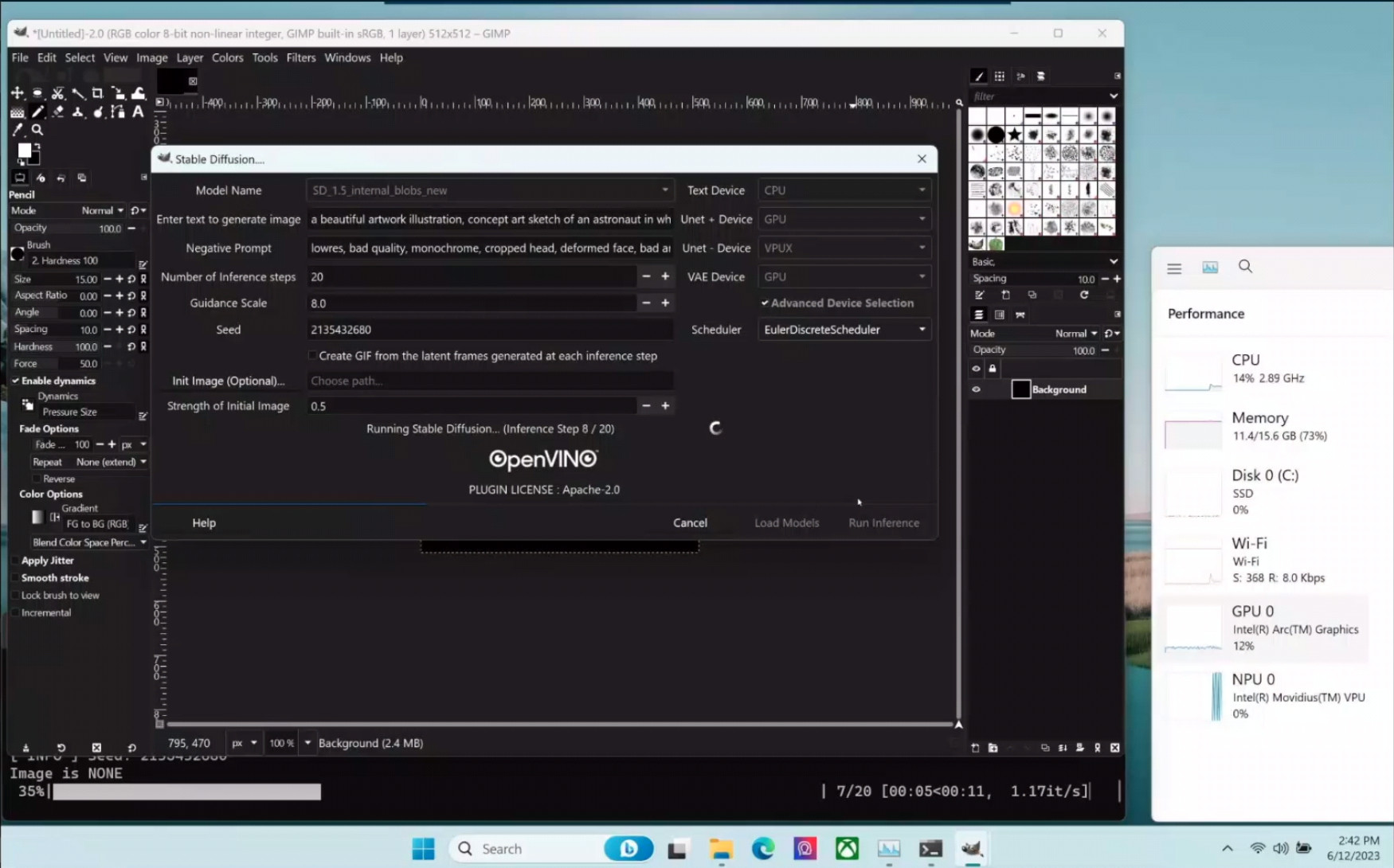

- Today: minor quality improvements, but better energy efficiency (screenshots #1, #3)

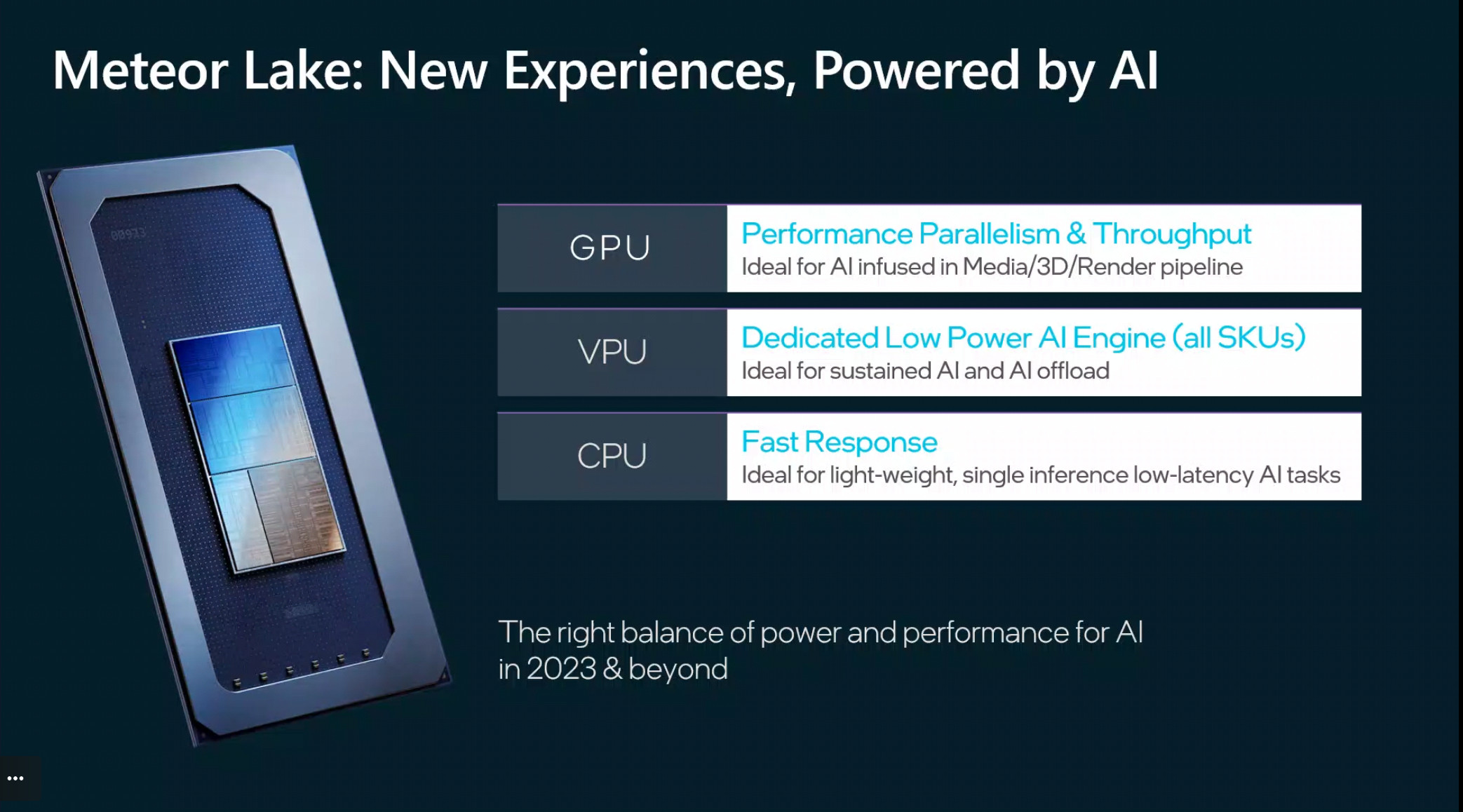

- Upcoming Intel Meteor Lake chips will include an equivalent of the Google TPU and the Apple Neural Engine: the “VPU” (screenshot #2)

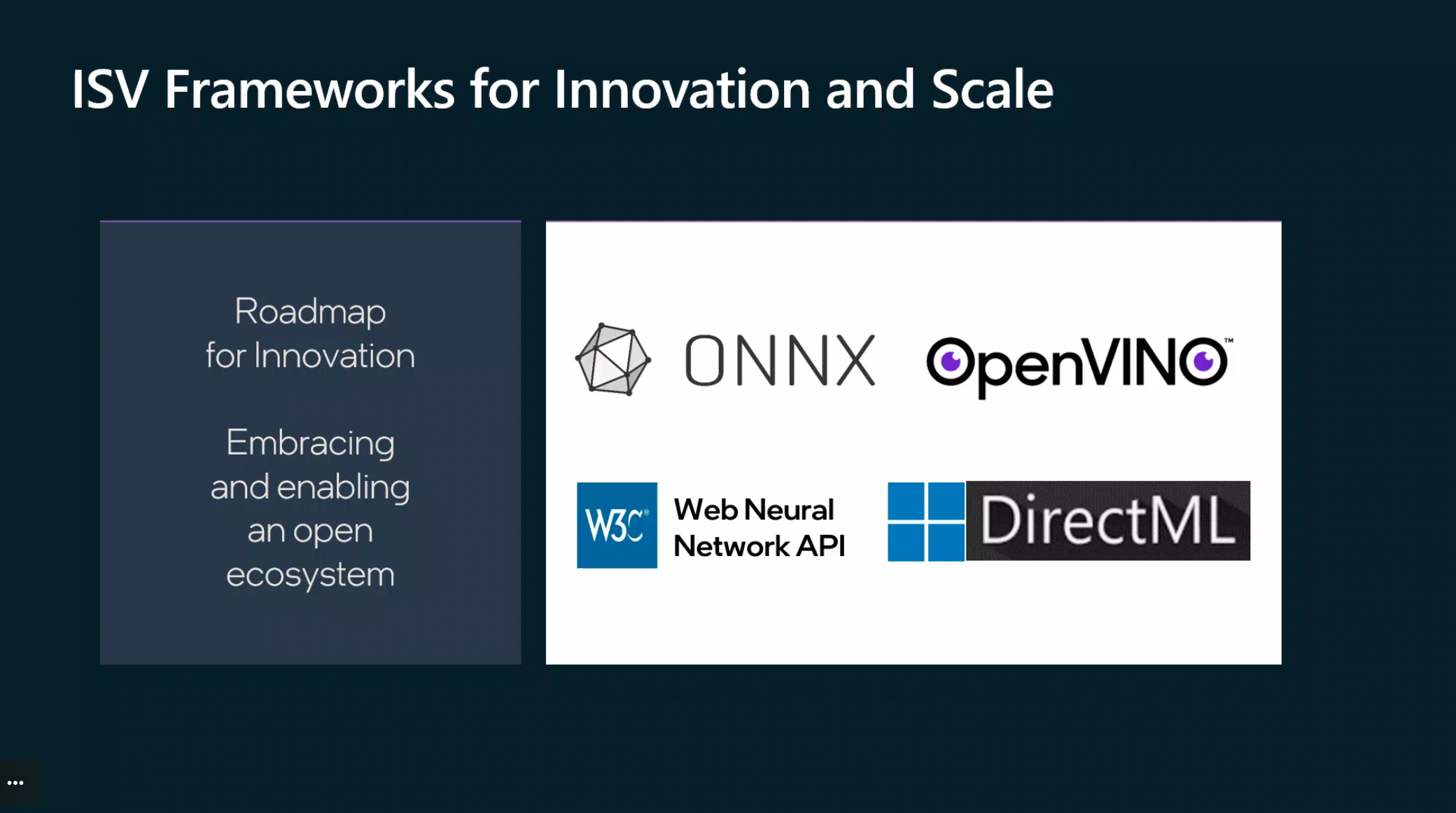

- Some example frameworks were named, including DirectML (screenshot #4). Questions about running Llama 2 locally and about other Windows APIs for local inference went unanswered.

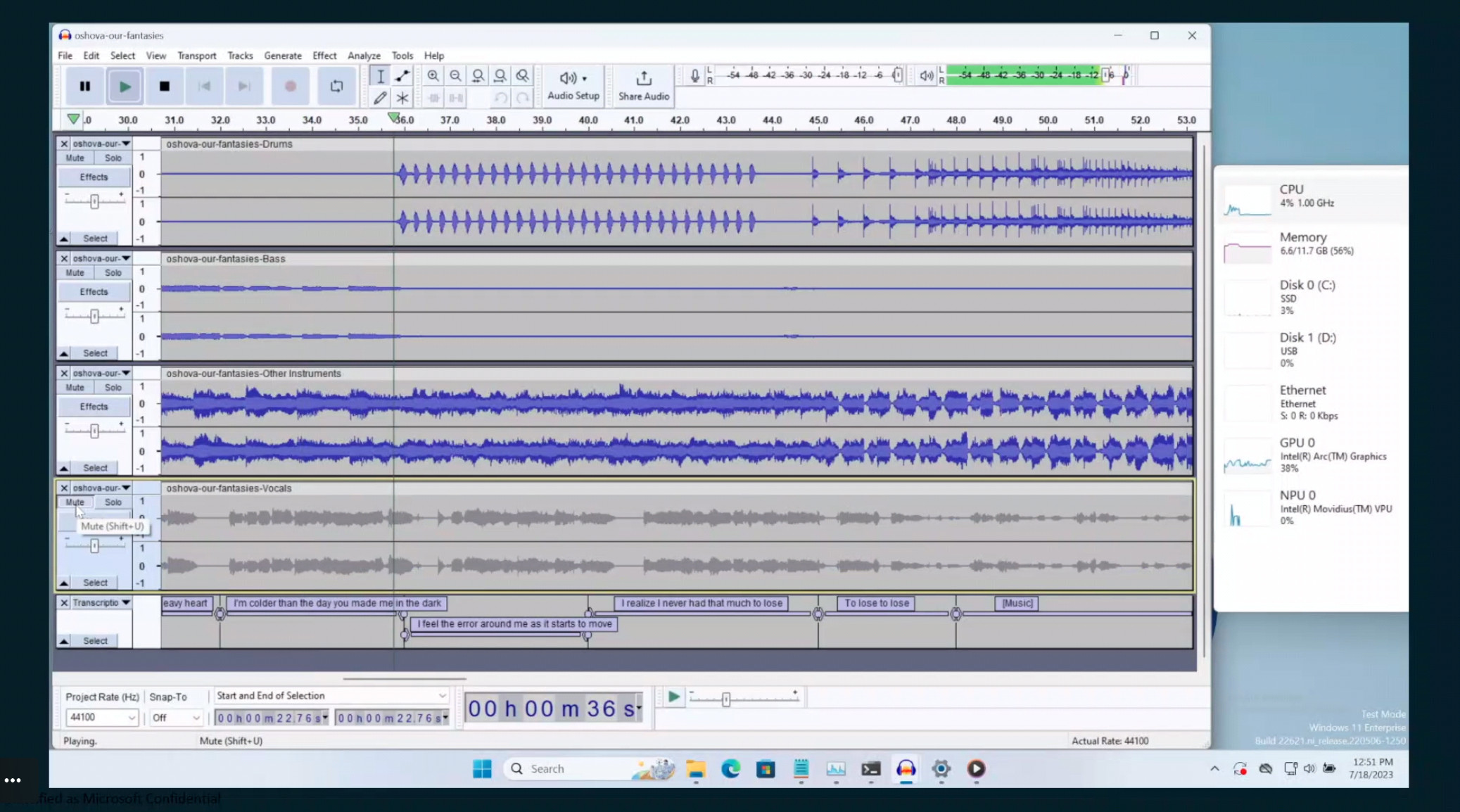

- Demos included an Adobe tool that adds cut marks/segment splits to a video's timeline, Stable Diffusion in GIMP (screenshot #5), and music track separation plus voice track transcription via OpenAI Whisper in Audacity (screenshot #6): a local karaoke machine! 🥳

- Q: Do any of the currently available Intel x86 chips support these extensions? A: Raptor Lake! (🤔)

Presenters:

- John Rayfield, VP & GM Client AI, Intel

- Max McMullen, Sigma Group Engineering Manager, Microsoft

(Note to the ultra-high-precision crowd: “LLaMA 2” has been rebranded to “Llama 2”, so that spelling is correct now as well. 😉)