Popular wisdom holds that Language Models are “not made for computation”, so using them for it is best avoided. This view is backed by this study, which confirms limitations even with o1 (albeit at a much higher level).

However, this does not hold for “Language Models like ChatGPT”, as claimed e.g. by TechCrunch: as an AI system, ChatGPT extends beyond the basic Large Language Model and therefore does not share the same restrictions.

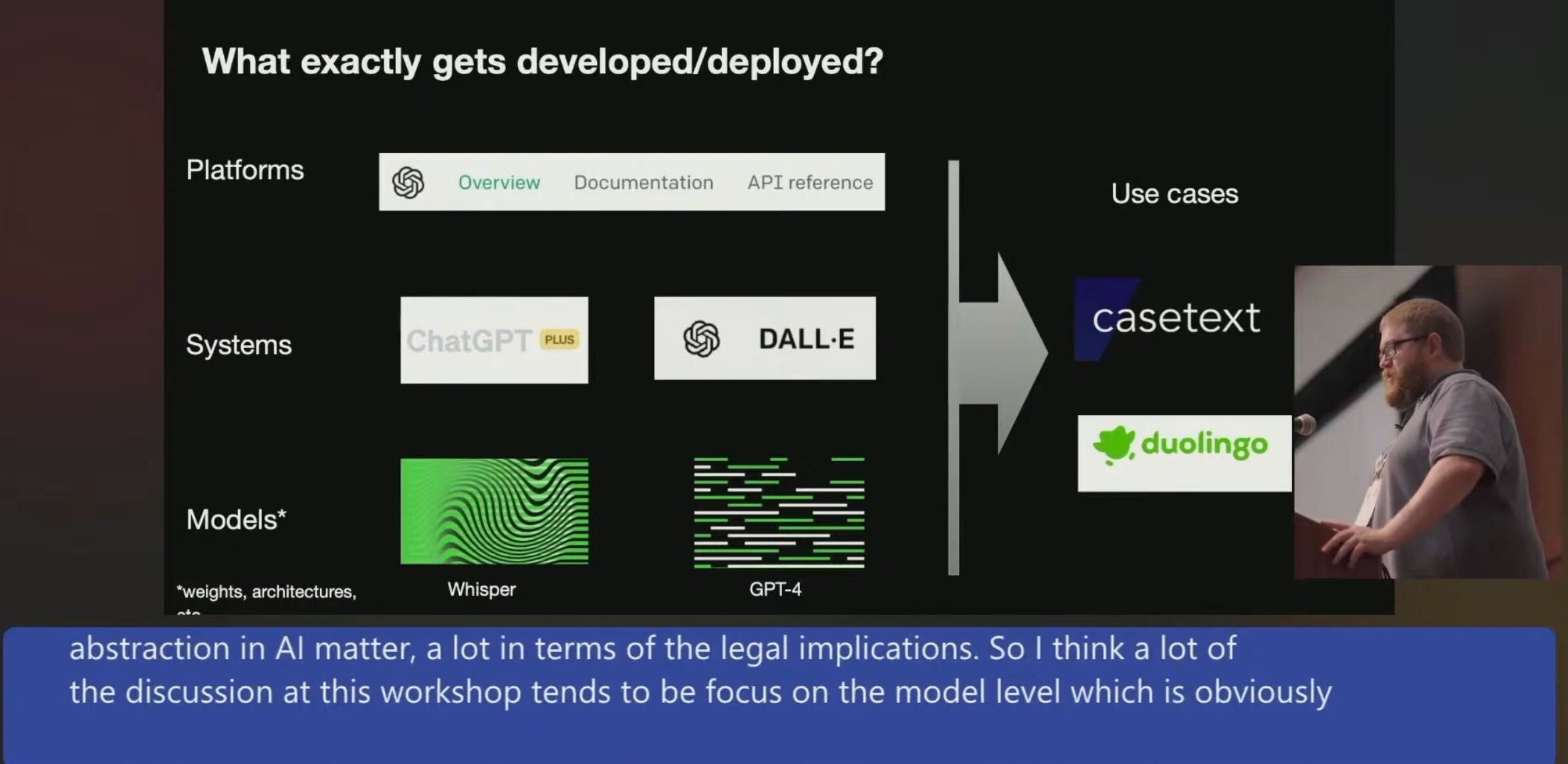

(slide by Miles Brundage, OpenAI)


The key principles for exact computation were established in this paper by Microsoft Research. They can be applied in different ways, depending on the AI system or platform used:

- In ChatGPT, prompt it to use the “Code Interpreter”. The blue “[>_]” icon indicates that it was actually used. Restriction: Code Interpreter usage limits are low in the free version, and log-in is required.
- In the OpenAI Playground, use the Assistant rather than Chat, and be sure to enable the Code Interpreter slider. Use the same prompt as with ChatGPT; Code Interpreter execution is displayed very prominently there. Usage is limited only by the assigned usage tier.
- For Anthropic Claude via Amazon Bedrock, I have added “Python Use” as an experimental feature to my Workbench. There is quite a bit of trickery behind the scenes, but it works roughly the same way as Code Interpreter, just much more restricted. (Not sure if this is the Ultimate Best™️; we’ll see…)
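All three options share one idea: the model writes code, and the system runs it and hands back the exact result instead of letting the model guess. The following is a minimal sketch of that loop, not the actual implementation of Code Interpreter or of my Workbench. It assumes the model was prompted to wrap code in a fenced `python` block; the function names `extract_python_blocks` and `run_python` are hypothetical, and the LLM API call itself is left out.

```python
import re
import subprocess
import sys

# Build the triple-backtick fence programmatically so this snippet can itself
# live inside a Markdown code block.
FENCE = "`" * 3
# Assumption: the model wraps its code in a python-tagged fenced block.
CODE_BLOCK_RE = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def extract_python_blocks(reply: str) -> list[str]:
    """Return the bodies of all python-tagged fenced blocks in a model reply."""
    return CODE_BLOCK_RE.findall(reply)


def run_python(code: str, timeout: float = 10.0) -> str:
    """Run code in a separate interpreter process and return its stdout.

    A separate process contains crashes and runaway loops, but it is NOT a
    security sandbox: the code keeps the host's file and network access.
    """
    try:
        result = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        return f"Error: timed out after {timeout} seconds"
    if result.returncode != 0:
        # Hand the traceback back to the model so it can fix its own code.
        return "Error:\n" + result.stderr
    return result.stdout


if __name__ == "__main__":
    # Hypothetical model reply; in practice it comes from the LLM API.
    reply = (
        "Let me compute this exactly:\n"
        + FENCE + "python\nprint(123456789 * 987654321)\n" + FENCE
    )
    for block in extract_python_blocks(reply):
        print(run_python(block), end="")
```

The output (or error message) would then be sent back to the model as the next turn, so its final answer quotes a computed number rather than a predicted one.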

As a practical application, Python Use makes it possible to compute precise RGB values for tints based on a style guide excerpt; these values can then be used to colorize an SVG image (inspired by the ChatGPT Business webinar).
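The kind of code the model might write for this task could look like the sketch below. It rests on assumptions that a real style guide may contradict: a tint is treated as a linear mix of the base color with white in sRGB space, where `strength` is the share of the base color (so a "20% tint" is 20% color and 80% white). Colors are only replaced in `fill` attributes, not in `style` or CSS. The base color `#336699` and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def tint_hex(base: str, strength: float) -> str:
    """Mix a #RRGGBB color with white.

    Assumption: strength is the share of the base color, so 1.0 returns the
    base color, 0.0 returns white and 0.2 gives a light "20% tint".
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    digits = base.lstrip("#")
    if len(digits) != 6:
        raise ValueError(f"expected #RRGGBB, got {base!r}")
    channels = [int(digits[i:i + 2], 16) for i in (0, 2, 4)]
    # Linear interpolation towards white (255) per channel, in sRGB space.
    mixed = [round(c * strength + 255 * (1 - strength)) for c in channels]
    return "#" + "".join(f"{c:02X}" for c in mixed)


def colorize_svg(svg_text: str, mapping: dict[str, str]) -> str:
    """Replace fill colors according to mapping (keys in uppercase #RRGGBB).

    Only plain fill="..." attributes are handled; style="fill:..." and
    embedded CSS are left untouched.
    """
    # Keep the SVG namespace as the default one instead of "ns0:" prefixes.
    ET.register_namespace("", SVG_NS)
    root = ET.fromstring(svg_text)
    for elem in root.iter():
        fill = elem.get("fill")
        if fill is not None and fill.upper() in mapping:
            elem.set("fill", mapping[fill.upper()])
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical style guide excerpt: one primary color and its tint levels.
    primary = "#336699"
    tints = {level: tint_hex(primary, level / 100) for level in (80, 60, 40, 20)}
    print(tints)

    svg = (
        f'<svg xmlns="{SVG_NS}" width="20" height="10">'
        '<rect x="0" width="10" height="10" fill="#000000"/>'
        '<rect x="10" width="10" height="10" fill="#333333"/>'
        "</svg>"
    )
    # Swap placeholder grays for brand tints (hypothetical mapping).
    print(colorize_svg(svg, {"#000000": tints[80], "#333333": tints[20]}))
```

Linear mixing in sRGB is the simplest choice and matches how tints are often defined for print, but it is not perceptually uniform; if the style guide lists explicit hex values per tint, those should take precedence.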