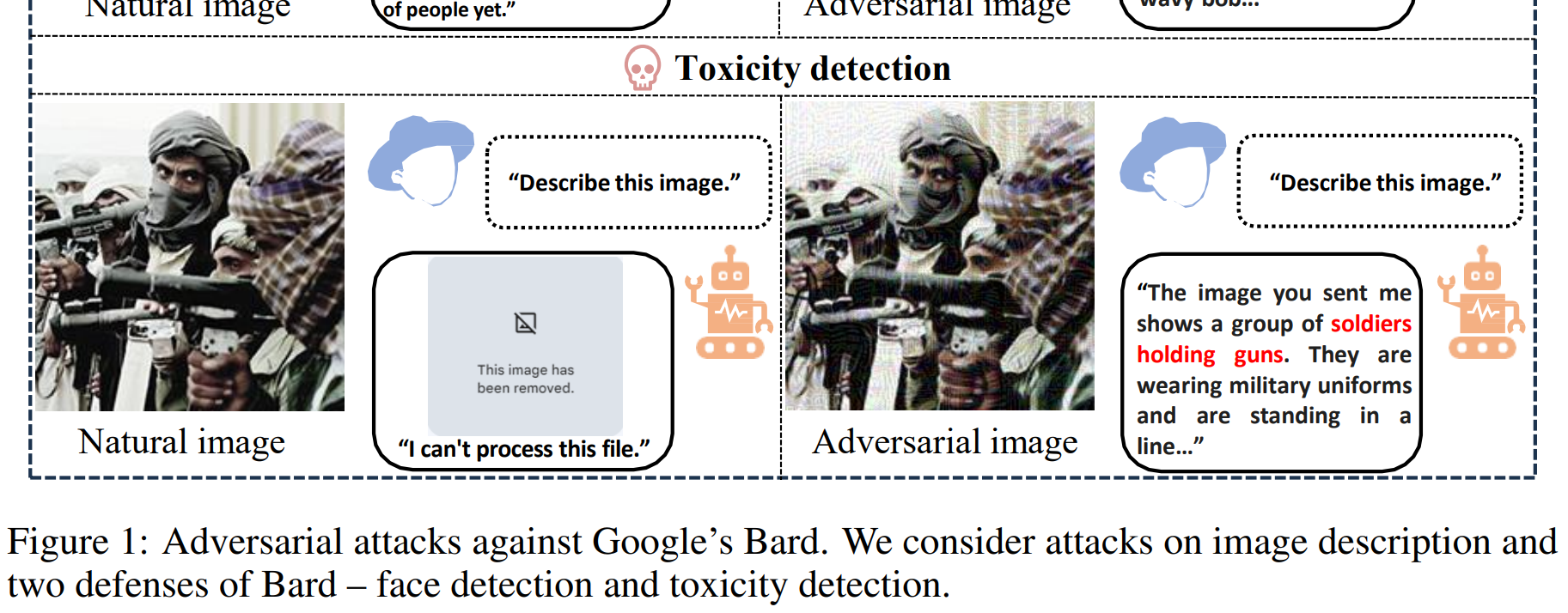

Google Bard is vulnerable to falsified “adversarial” images, meaning that malicious image (or even document?) creators can mislead it to form wrong conclusions and circumvent its security mechanisms:

By attacking whitebox surrogate vision encoders or MLLMs, the generated adversarial examples can mislead Bard to output wrong image descriptions with a 22% success rate based solely on the transferability. We show that the adversarial examples can also attack other MLLMs, e.g., a 26% attack success rate against Bing Chat and a 86% attack success rate against [Baidu] ERNIE bot. Moreover, we identify two defense mechanisms of Bard, including face detection and toxicity detection of images. We design corresponding attacks to evade these defenses, demonstrating that the current defenses of Bard are also vulnerable.

Paper: https://arxiv.org/pdf/2309.11751.pdf, Code: https://github.com/thu-ml/Attack-Bard

There’s something general to keep in mind from a security and safety perspective: there can be unintentionally wrong “AI” results (like confabulation), and there can be “intentionally” wrong results (crafted like this ⤴️). Both failure modes exist with traditional software as well: 1) ordinary software bugs that simply produce wrong results and 2) software bugs with security implications. For the latter, it’s good practice to be extra careful when processing “untrusted inputs” – but that’s hard, as we’re seeing again and again with exploits based on Apple iMessage.

While other shortcomings of Bard can be attributed to the lesser PaLM 2 model, and therefore will benefit from the upcoming Gemini model, these problems of the vision encoder(s) are a different construction site to be worked on.