Reports about “AI getting dumber” are making international headlines in daily newspapers and professional outlets alike. I’m convinced that this is not what has happened. Rather, it’s a bugfix gone wrong. I used to say that no matter how low-effort your inquiry is, GPT-4 will always come back with a good answer (🇩🇪 translated from German: “You can bark at it as cluelessly as you like…”). Not anymore: GPT seems to have been fine-tuned to match the quality of the answer to the quality of the “question”.

It hasn’t. @OpenAI has simply tuned GPT-4’s responses to match the caliber and quality of the prompt. GPT-4 will only give good responses if the prompt shows deep understanding and thinking. This is how they’re making it a personalized tool for all.

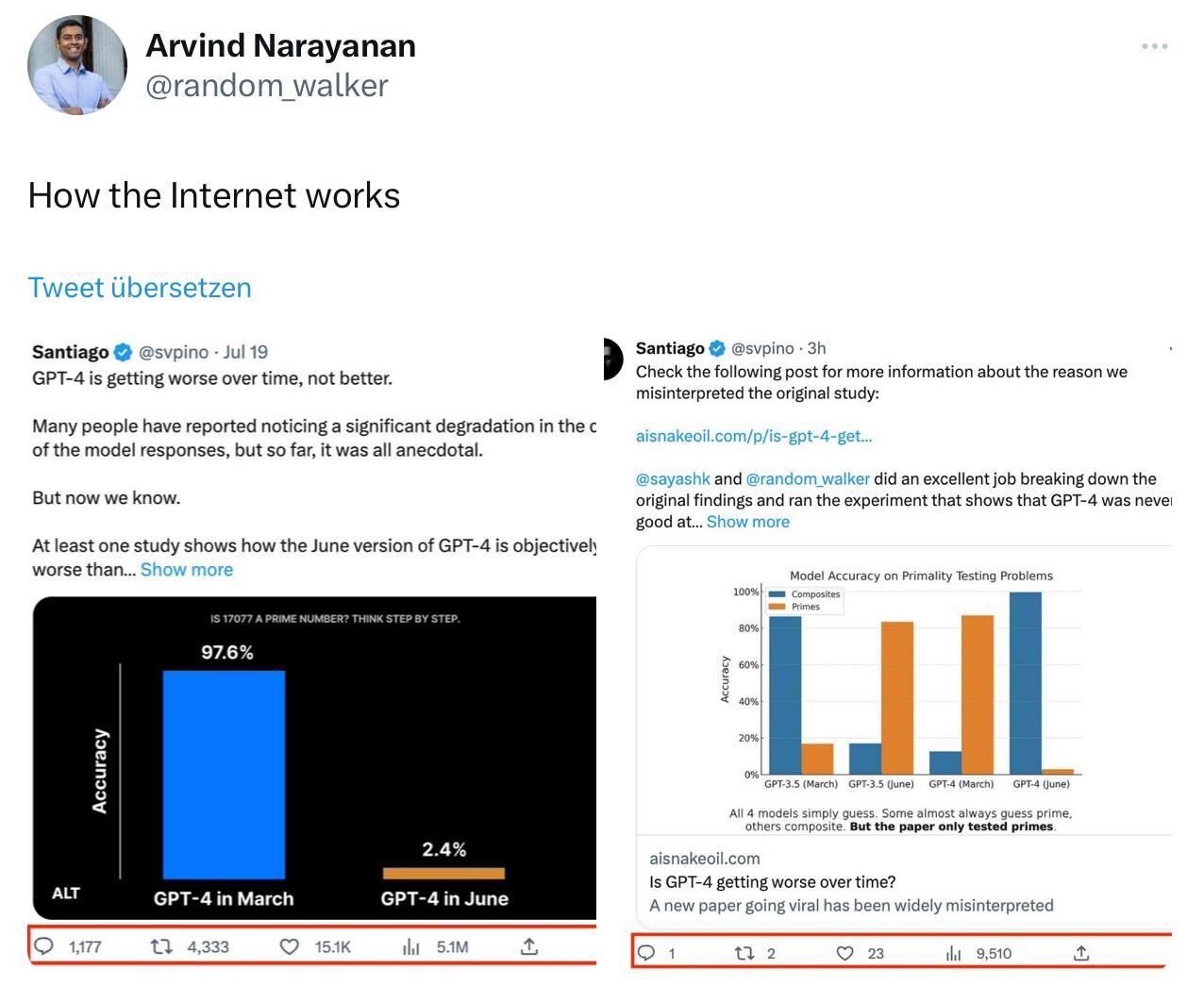

Why is this not widely known? Well:

Or as one commenter put it: “we’re not seeing a degradation. It’s a behavior change”

So the solution to “bad” answers is to pose better questions. 🙂

One German newspaper (Süddeutsche Zeitung) offers a different explanation: models are getting trained on their own output, now that such output litters the web. I don’t buy it. My response to this on our corporate internal chat (translated by GPT-4):

The “feedback loop” mentioned there may make intuitive sense, but I don’t see it as technically substantiated. On the contrary, the literature reports catastrophic collapse of certain subcomponents in this case. I have not yet seen this quantified, but my first assumption would be that the metrics routinely recorded during training reveal such corruption with the tooling already in use today.

While skimming the LLaMA 2 paper, I picked up that its successes are attributed to rigorous quality control of the supplied content: “Quality is all you need” (a jab at the title of the original Google paper “Attention is all you need”).

So this would rather be an example of “journalists hallucinating”. 😉

And yes, after reading more into it, GPT and architecturally similar models are very sensitive and prone to dementia. What the journalists describe there simply wouldn’t happen to professionals in the field - one would assume. 😉
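To make the “dementia” point a bit more tangible, here is a toy sketch of a model being trained on its own output. It is deliberately not an LLM: the “model” is just a Gaussian that gets refitted on samples drawn from its previous fit. The function name `simulate_self_training`, the sample size, and the number of generations are all made up for illustration. The only thing it is meant to show is that the degradation is visible in a plain number you would record anyway (here, the fitted standard deviation), which is the argument from my chat message above.

```python
import random
import statistics


def simulate_self_training(generations=200, sample_size=10, seed=0):
    """Refit a Gaussian on its own samples, generation after generation.

    Hypothetical toy setup: returns the fitted standard deviation per
    generation, which plays the role of the routinely recorded metric.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: the "real" data distribution
    tracked_std = [std]
    for _ in range(generations):
        # The current model produces the training data for the next one.
        samples = [rng.gauss(mean, std) for _ in range(sample_size)]
        # "Training" is just a maximum-likelihood fit of mean and std.
        mean = statistics.fmean(samples)
        std = statistics.pstdev(samples)
        tracked_std.append(std)
    return tracked_std


if __name__ == "__main__":
    history = simulate_self_training()
    for generation in (0, 10, 50, 100, 200):
        print(f"generation {generation:3d}: std = {history[generation]:.3e}")
```

With a small sample per generation, the fitted spread drifts toward zero, so the toy model gradually forgets the tails of the original distribution. Whether and how fast anything comparable happens in a real training pipeline is a separate question that this sketch does not answer.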