My Chatbot interface for OpenAI models (Live demo) has gotten a few improvements lately:

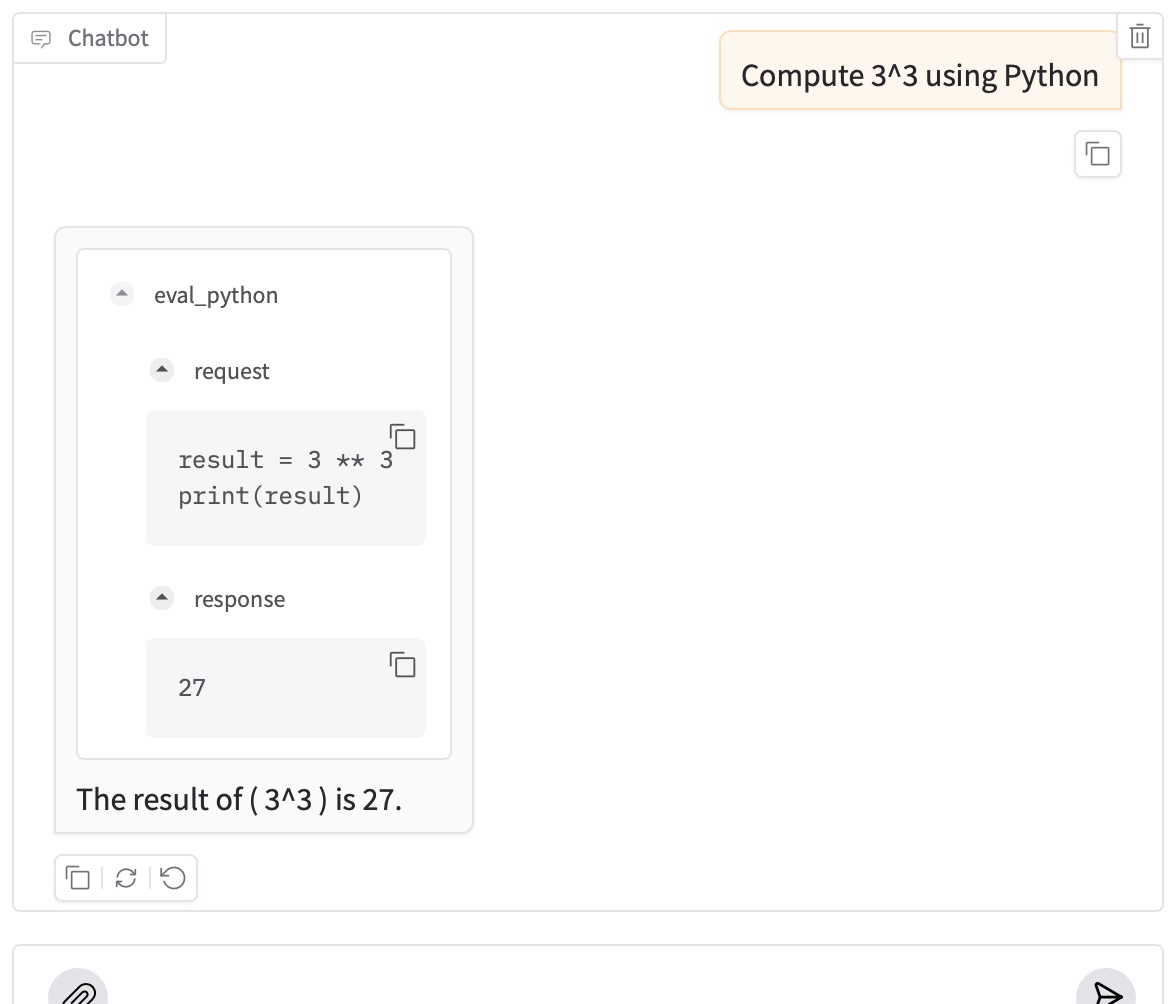

- Tool-Use presentation:

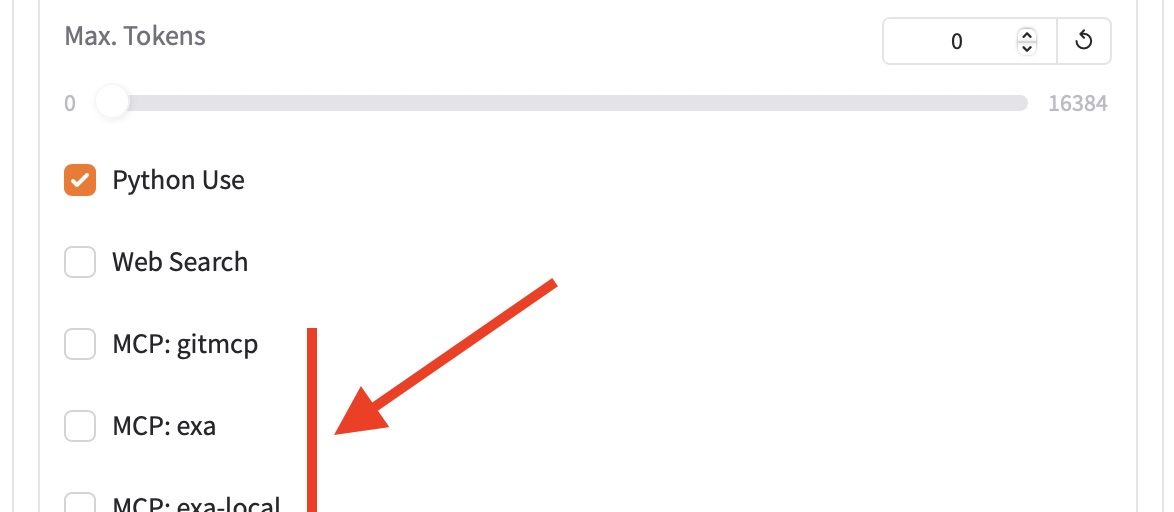

- Support for MCP Servers (aka “Model Context Protocol”)

- Servers are registered in mcp_registry.json, which loosely follows the Visual Studio syntax

- A sample is provided in mcp_registry.sample.json

- The MCP Servers to use can be selected/deselected like traditional tools

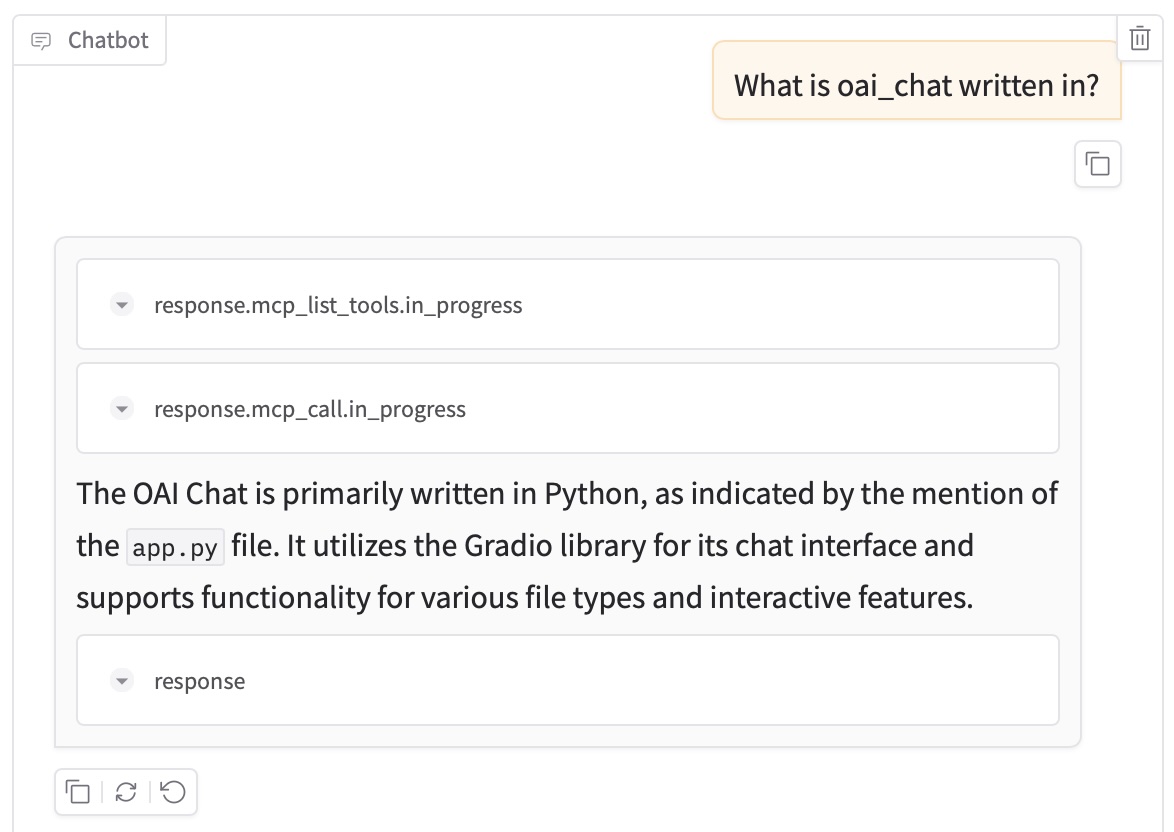
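To make the registration step more concrete, here is a minimal sketch of how such a registry could be read and filtered. The JSON layout (a top-level `servers` object with `type`, `url`, `command`, and `args` keys) is an assumption loosely modelled on the editor-style syntax mentioned above, and the server names, the URL, and the helper functions are all hypothetical. The actual mcp_registry.json may differ, so check mcp_registry.sample.json for the real shape.

```python
import json

# Hypothetical registry contents; keys and values are assumptions for illustration only.
SAMPLE_REGISTRY = """
{
  "servers": {
    "docs": {"type": "http", "url": "https://example.invalid/mcp"},
    "search": {"type": "stdio", "command": "example-mcp-server", "args": ["--stdio"]}
  }
}
"""


def load_registry(text):
    """Parse registry JSON and return a {name: config} mapping of servers."""
    data = json.loads(text)
    servers = data.get("servers", {})
    if not isinstance(servers, dict):
        raise ValueError("'servers' must be an object mapping names to configs")
    return servers


def split_by_transport(servers):
    """Separate remote servers (URL-based) from local stdio servers."""
    remote, local = {}, {}
    for name, cfg in servers.items():
        if cfg.get("type") == "stdio" or "command" in cfg:
            local[name] = cfg
        else:
            remote[name] = cfg
    return remote, local


def select_servers(servers, enabled):
    """Keep only the servers the user has ticked, like toggling regular tools."""
    return {name: cfg for name, cfg in servers.items() if name in enabled}
```

Splitting by transport early keeps the two code paths apart: remote entries can be handed to the model backend, while stdio entries have to be launched and called on the local machine.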

- Remote MCP Server integrations are handled directly by the OpenAI backend. Example with the gitmcp MCP server for oai_chat:

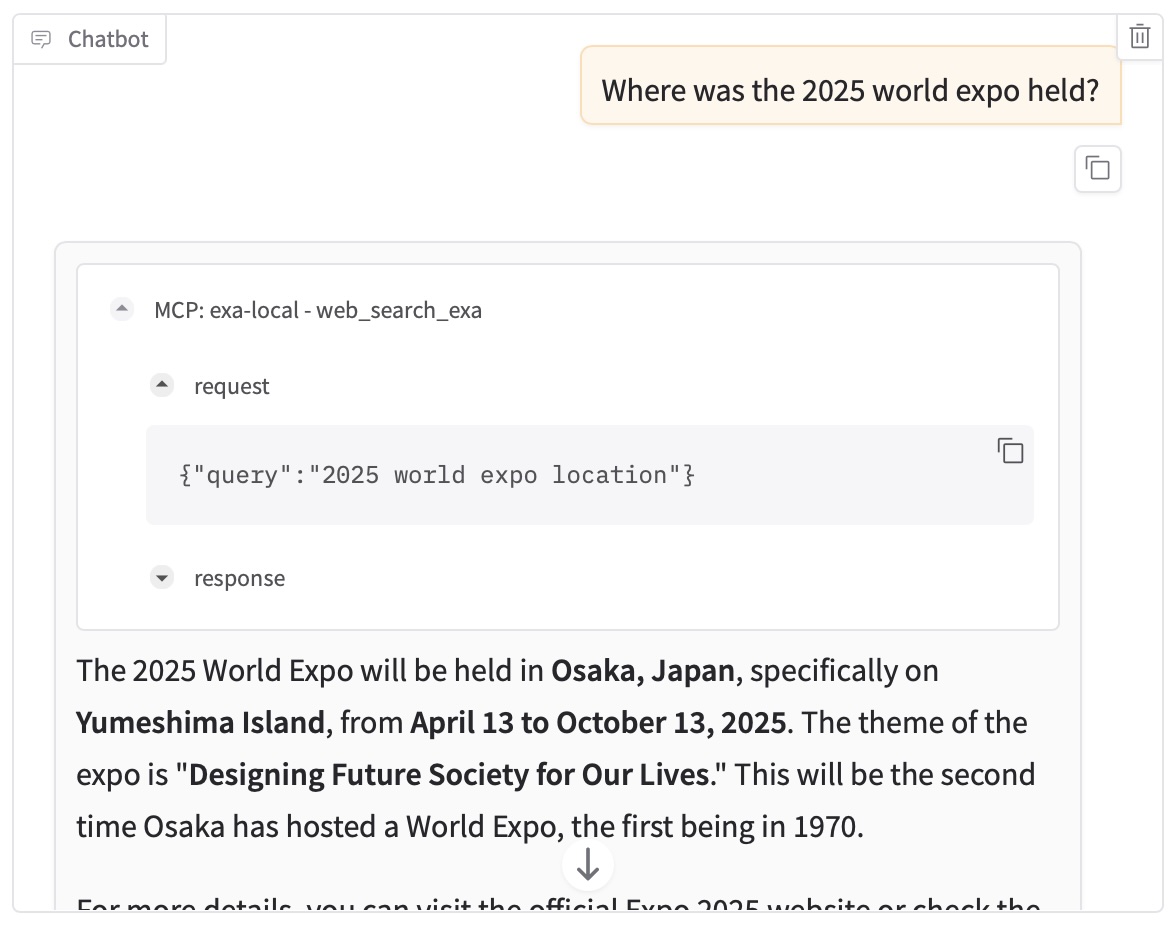
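For readers who have not used remote MCP servers yet, the sketch below shows roughly what "handled by the OpenAI backend" means: the server is passed as a tool of type `mcp` to the Responses API, and OpenAI contacts the server itself. This is not taken from the chatbot's code. The helper names, the model name, and the placeholder URL `https://gitmcp.io/OWNER/REPO` are assumptions, and the API call itself needs the `openai` package plus a valid token.

```python
import os


def build_remote_mcp_tool(label, url, require_approval="never"):
    """Build the tool entry that tells the OpenAI backend to call a remote MCP server."""
    return {
        "type": "mcp",
        "server_label": label,
        "server_url": url,
        "require_approval": require_approval,
    }


def ask_with_remote_mcp(question, tool, model="gpt-4.1"):
    """Send one question with the remote MCP tool attached (performs a network call)."""
    # Imported lazily so the payload helper above works without the SDK installed.
    from openai import OpenAI

    # Assumption: reuse the OPENAI_API_TOKEN variable mentioned in this post.
    # If it is unset, the SDK falls back to its own default lookup.
    client = OpenAI(api_key=os.environ.get("OPENAI_API_TOKEN"))
    response = client.responses.create(model=model, input=question, tools=[tool])
    return response.output_text


# Placeholder URL: replace OWNER/REPO with the repository you want to query.
gitmcp_tool = build_remote_mcp_tool("gitmcp", "https://gitmcp.io/OWNER/REPO")
```

Calling `ask_with_remote_mcp("What does this repository do?", gitmcp_tool)` would then let the model query the server without any MCP traffic passing through the local machine.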

- Local MCP Server integrations (stdio) are not routed through OpenAI but are called locally. Example: web search with Exa.

(Their remote MCP server appeared flaky recently, so I made sure that running the MCP server locally works. Not available on the HuggingFace live demo.)


- UnrestrictedPython: the counterpart to RestrictedPython, which lets the LLM execute code in a sandbox. Setting the environment variable CODE_EXEC_UNRESTRICTED_PYTHON=1 lifts the sandbox and allows arbitrary code execution. Know the risks.

- Reasoning tokens are now fed back into reasoning models. OpenAI claims this improves performance, e.g. by 3% on SWE-bench.

- The OpenAI API token is now set automatically if OPENAI_API_TOKEN is set, either through the environment or .env
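The two settings above can be illustrated with a small, dependency-free sketch. It shows one way to resolve `OPENAI_API_TOKEN` and `CODE_EXEC_UNRESTRICTED_PYTHON` from either the process environment or a `.env` file. The function names, the simplified `.env` parsing, and the rule that the real environment wins over `.env` are assumptions for illustration, not necessarily how the chatbot does it.

```python
import os


def parse_dotenv(text):
    """Parse simple KEY=VALUE lines; comments and blank lines are skipped."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def resolve_settings(environ, dotenv_text=""):
    """Merge .env values with the environment (assumed precedence: environment wins)."""
    merged = {**parse_dotenv(dotenv_text), **environ}
    return {
        "api_token": merged.get("OPENAI_API_TOKEN") or None,
        # Only the exact value "1" lifts the sandbox; anything else keeps it on.
        "unrestricted_python": merged.get("CODE_EXEC_UNRESTRICTED_PYTHON") == "1",
    }


def load_settings(dotenv_path=".env"):
    """Read .env if it exists and combine it with os.environ."""
    text = ""
    if os.path.exists(dotenv_path):
        with open(dotenv_path, encoding="utf-8") as handle:
            text = handle.read()
    return resolve_settings(dict(os.environ), text)
```

Treating anything other than the literal `1` as "sandbox on" is a deliberately conservative choice: a typo in the variable then fails safe instead of silently enabling arbitrary code execution.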

Philipp Schmid, AI Developer Experience at Google DeepMind, recently announced his “Code Sandbox MCP”, a simple code interpreter for AI agents. It is based on the LLM Sandbox project. Either could be an avenue for future improvement.

[Update 2025-08-07] I have added basic support for GPT-5, including GPT-5 Regular, GPT-5 Mini, and GPT-5 Chat/Main.