Updates on Document-Processing:

- long context models Claude-2 100K and GPT-4-32K have landed on my desk. Huge thanks 🙏🏻 to the benefactors!

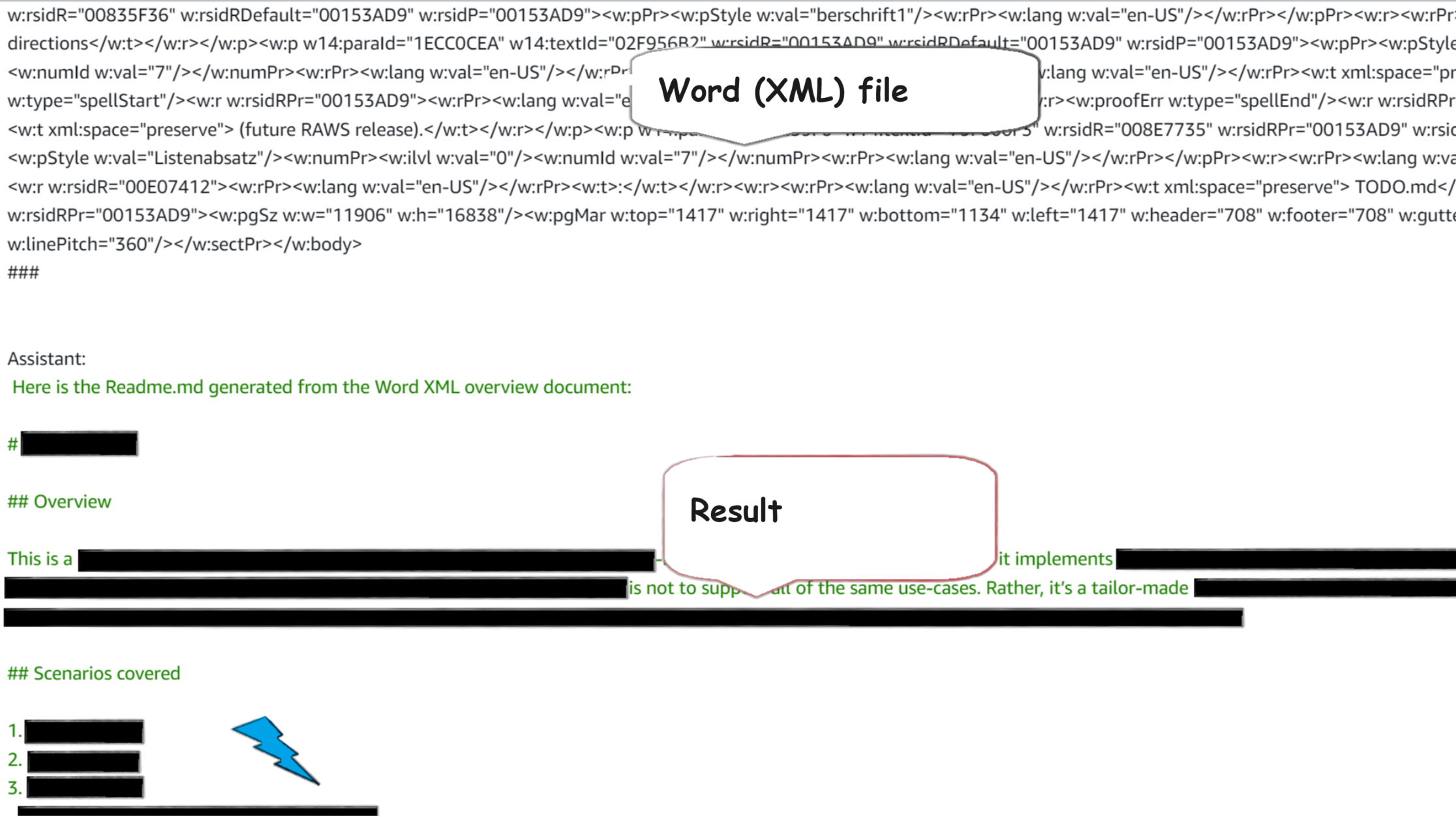

- My first use-case: convert a project overview I had foolishly written in Word to plain text Markdown, to grace my Bitbucket source code repository. I saved the Word document to the “Word XML document (*.xml)” file type, pasted this verbatim to the Playground, and let Claude-2 do the work (see screenshot). Because there is a lot of non-payload noise in Word-XML, this naive approach works only for rather short documents - and seems expensive. Mistake: my emoji bullet points (✅/❌) got mis-represented as numbered list (blue flash in screenshot). Potential future work: 1) figure out how to clean Word-XML of anything non-essential, 2) will Claude-2 also understand advanced formatting, like columns and tables? 3) simply pasting a (compressed) PDF did not work 🤡, but what might?

- The team caught the (infinite document-length) Aleph Alpha Summarizer hallucinating on a document. This was with a position paper on eID by Bankenverband, a representative body for private commercial banks in Germany. The summarizer hallucinated that a core demand was a “blockchain-based identity”. The mistake was caught by a colleagues’ follow-up question of Why? and Claude-2 clarifying, based on the full document text: “The text does not mention that the Bankenverband wishes the digital identity system to be blockchain-based. The text focuses on aspects like user-friendliness, security levels, interoperability, and market acceptance, but does not advocate for a specific technology like blockchain to be used for implementing the digital identity system. The technical architecture is not specified as a key priority by the Bankenverband.”

- Note displayed in the Playground: “This endpoint is deprecated and will be removed in a later version. New methods of processing Q&A tasks will be provided before this is removed.”

- (I used GPT-4-32K for the final summary to be used; this one was linguistically nicer than the Claude-2 result)

- … which reminds of a question I always forget to ask: how might we go about getting evaluations/comparisons from real humans? There seems to be mounting evidence that automated evals/scores just don’t really work and MTurk clickworkers just use ChatGPT nowadays.

- Paper by Meta AI and Princeton researchers: “Walking Down the Memory Maze: Beyond Context Limit through Interactive Reading”: Despite attempts to extend the context window […], long-text understanding continues to be a challenge. We propose an alternative approach which instead treats the LLM as an interactive agent, allowing it to decide how to read the text via iterative prompting. We introduce MEMWALKER, a method that first processes the long context into a tree of summary nodes. Upon receiving a query, the model navigates this tree in search of relevant information, and responds once it gathers sufficient information. On long-text question answering tasks our method outperforms baseline approaches that use long context windows, recurrence, and retrieval. We show that, beyond effective reading, MEMWALKER enhances explainability by highlighting the reasoning steps as it interactively reads the text; pinpointing the relevant text segments related to the query.

- There is certain overlap with PDFTriage published by Adobe Research highlighted earlier.